The new AR library (ARKit) provides capabilities for detecting 2D images and 3D objects, as well as multiuser AR, where several users can play the same 3D game at the same time in the real world. Every AR capture runs inside an AR session that the application logic maintains while 3D objects are being detected. Once the AR session starts, it can create an AR reference object, which can be saved and loaded into another AR session. ARKit provides many other capabilities, such as saving and loading a 3D world map with ARWorldMap, and detecting and tagging 3D text. Only a limited number of libraries support 3D modeling and object detection, and libraries other than ARKit are considerably harder to integrate into iOS app development and to leverage for these features.

Prerequisites for the AR library and 3D object detection:

- Xcode 10 (beta or above).

- iOS 12 (beta or above).

- iPhone 6S or later (Apple A9 chip or above), or an AR-capable iPad.

- Reference object files in a supported format.
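
The device and OS prerequisites can also be checked at runtime. The following is a minimal sketch, assuming the app links against ARKit; the function name `supportsObjectDetection` is a hypothetical helper, not part of ARKit.

```swift
import ARKit

/// Hypothetical helper: returns true when the current device and OS
/// can run both object scanning and object detection sessions.
func supportsObjectDetection() -> Bool {
    // Object scanning and detection were introduced with iOS 12.
    guard #available(iOS 12.0, *) else { return false }
    // `isSupported` is false on devices without the required hardware.
    return ARWorldTrackingConfiguration.isSupported
        && ARObjectScanningConfiguration.isSupported
}
```

Calling this before presenting the AR screen lets the app show a fallback message on unsupported devices instead of starting a session that cannot run.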

There are a few mandatory rules to follow during 3D object detection and recognition:

Rule 1:

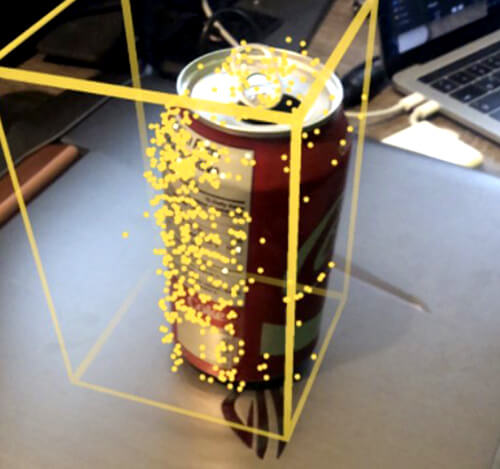

The developer or user needs to keep the object at the center of the camera view so that it is detected correctly and its dimensions are visible more accurately. Once the object is detected, its dimensions are captured properly.

Rule 2:

The developer needs to create a box area in which the object is captured and its dimensions are calculated. The object should not extend outside the box in the camera view; otherwise, the captured dimensions will be inaccurate.
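
To illustrate the box rule, here is a small sketch in plain Swift of an axis-aligned containment check. The types `Point3` and `ScanBox` are hypothetical and exist only for this example; a real app would more likely work with ARKit's `simd_float3` values and the box's own transform.

```swift
// Hypothetical types for illustration; not part of ARKit.
struct Point3 {
    var x, y, z: Float
}

struct ScanBox {
    var center: Point3
    var extent: Point3   // full width, height, and depth in meters

    /// True when the point lies inside the box (edges included).
    func contains(_ p: Point3) -> Bool {
        return abs(p.x - center.x) <= extent.x / 2
            && abs(p.y - center.y) <= extent.y / 2
            && abs(p.z - center.z) <= extent.z / 2
    }

    /// Fraction of the given points that fall inside the box (0...1).
    func coverage(of points: [Point3]) -> Float {
        guard !points.isEmpty else { return 0 }
        let inside = points.filter { contains($0) }.count
        return Float(inside) / Float(points.count)
    }
}
```

A low coverage value for the object's feature points would suggest that part of the object sits outside the box and that the box should be moved or resized before capture.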

Rule 3:

The developer needs to create coordinate axes for the 3D object capture and some anchor points relative to the origin. If a specific format such as USDZ is required, the developer needs to add an extra layer with a button that places the AR model in the real world. When the button is tapped, the model is immediately resized to fit inside the box and displayed as an accurate real-world 3D object.

Rule 4:

The developer needs to create an ARReferenceObject, which describes the 3D object together with its size and orientation. With it, the AR library can recognize the object's exact position, orientation, and dimensions within the box.
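
As a rough sketch of how the size and orientation might be read back once an object is recognized, the function below inspects an `ARObjectAnchor`. The function name `describe` is hypothetical, and the sketch assumes the anchor's transform is a rigid transform (rotation plus translation, no scaling).

```swift
import ARKit
import simd

/// Hypothetical helper: summarizes where a detected object is and how big it is.
@available(iOS 12.0, *)
func describe(_ objectAnchor: ARObjectAnchor) -> String {
    let object = objectAnchor.referenceObject

    // The last column of the anchor transform holds the world-space position.
    let position = objectAnchor.transform.columns.3

    // Assumption: the transform carries no scale, so it converts cleanly to a quaternion.
    let orientation = simd_quatf(objectAnchor.transform)

    // `extent` is the size of the scanned region in meters.
    let size = object.extent

    return String(
        format: "%@ at (%.2f, %.2f, %.2f), size %.2f x %.2f x %.2f m, rotation angle %.2f rad",
        object.name ?? "unnamed object",
        position.x, position.y, position.z,
        size.x, size.y, size.z,
        orientation.angle
    )
}
```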

Implementation of 3D Object Detection in an iOS Application:

1. The developer first obtains reference objects from an AR scanning session and then loads the objects that need to be detected as ARReferenceObject instances. These reference objects are assigned to the detectionObjects property of an ARWorldTrackingConfiguration, and that configuration is passed to the AR session.

2. The developer runs the ARKit session and detects the reference objects in the captured scene. When an object is detected, the session adds a corresponding ARObjectAnchor to its list of anchors. The app responds by implementing the ARSessionDelegate, ARSCNViewDelegate, or ARSKViewDelegate method that reports newly added anchors. A new session can create its own corresponding anchors in the same way.

3. A reference object encodes a slice of the internal spatial-mapping data that ARKit uses to track the device's position and orientation, along with the object's dimensions and mapping coordinates. To collect the high-quality data that scanning requires, the developer needs to scan the object in a session configured with ARObjectScanningConfiguration.

4. Once the object-scanning AR session has collected enough spatial data to recognize the object, the developer calls createReferenceObject, specifying the appropriate region of the scene, to produce the ARReferenceObject used for detection.
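
The four steps above can be sketched as a single controller class. This is a minimal illustration under stated assumptions, not a complete implementation: the class name `ObjectDetectionController`, the asset catalog group name `"ScannedObjects"`, and the way the box transform, center, and extent are supplied are all hypothetical.

```swift
import ARKit
import SceneKit

@available(iOS 12.0, *)
final class ObjectDetectionController: NSObject, ARSCNViewDelegate {
    let sceneView: ARSCNView

    init(sceneView: ARSCNView) {
        self.sceneView = sceneView
        super.init()
        sceneView.delegate = self
    }

    // Step 3: run a session tuned for high-quality object scanning.
    func startScanning() {
        let configuration = ARObjectScanningConfiguration()
        configuration.planeDetection = .horizontal
        sceneView.session.run(configuration,
                              options: [.resetTracking, .removeExistingAnchors])
    }

    // Step 4: extract a reference object from the scanned region and save it.
    // The caller supplies the box transform, center, and extent (in meters);
    // `url` is assumed to point to a writable ".arobject" file.
    func finishScanning(boxTransform: simd_float4x4,
                        center: simd_float3,
                        extent: simd_float3,
                        saveTo url: URL) {
        sceneView.session.createReferenceObject(transform: boxTransform,
                                                center: center,
                                                extent: extent) { object, error in
            guard let object = object else {
                print("Scan failed: \(error?.localizedDescription ?? "unknown error")")
                return
            }
            do {
                try object.export(to: url, previewImage: nil)
            } catch {
                print("Export failed: \(error.localizedDescription)")
            }
        }
    }

    // Step 1: load reference objects and pass them to a detection session.
    // "ScannedObjects" is a hypothetical AR resource group in the asset catalog.
    func startDetecting() {
        guard let referenceObjects = ARReferenceObject.referenceObjects(
            inGroupNamed: "ScannedObjects", bundle: nil) else {
            print("No reference objects found")
            return
        }
        let configuration = ARWorldTrackingConfiguration()
        configuration.detectionObjects = referenceObjects
        sceneView.session.run(configuration,
                              options: [.resetTracking, .removeExistingAnchors])
    }

    // Step 2: ARKit adds an ARObjectAnchor when it recognizes an object.
    func renderer(_ renderer: SCNSceneRenderer, didAdd node: SCNNode, for anchor: ARAnchor) {
        guard let objectAnchor = anchor as? ARObjectAnchor else { return }
        print("Detected \(objectAnchor.referenceObject.name ?? "unnamed object")")
    }
}
```

In a typical flow, scanning and detection would run in separate sessions: `startScanning()` and `finishScanning(...)` while capturing the object, and `startDetecting()` later when the app needs to recognize it.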

Sample screen once the app is developed for 3D object detection: